Teleport Blog - How we improved SSH connection times by up to 40% - Aug 29, 2023

How we improved SSH connection times by up to 40%

At Teleport we provide secure access to our customers’ infrastructure, adding passwordless SSO, session recording and audit to every connection. Every day, our customers log in to their clusters and connect to their infrastructure. We weren’t happy with how long SSH took to establish connections to target hosts through the Teleport Proxy. So we set out to identify and eliminate any excess overhead, with the goal of making these connections as fast as, or faster than, connecting to servers directly.

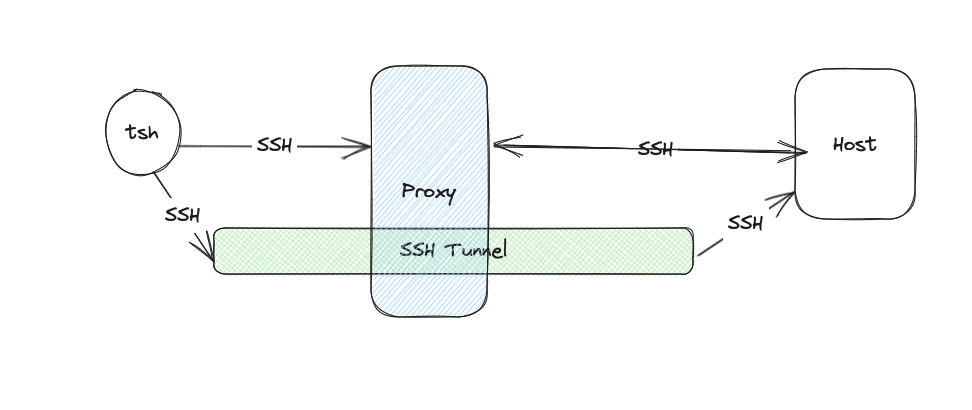

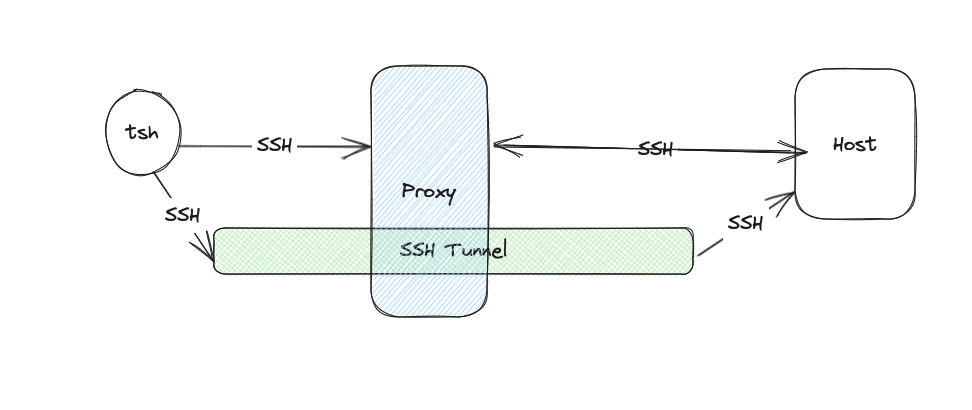

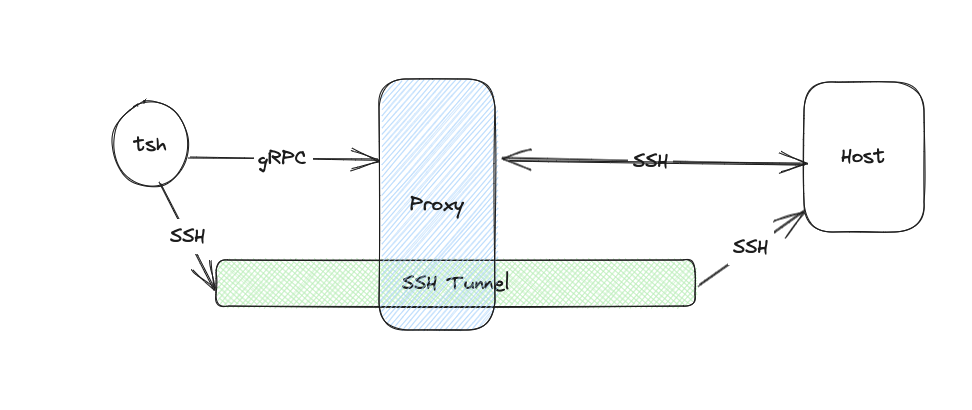

How tsh ssh connections work

When using Teleport, all connections, including SSH, are routed through an identity-aware proxy. This proxy only supports certificate-based authentication and performs additional checks, such as whether the device is trusted and whether MFA was used. We support the SSH protocol natively and also provide a client utility, tsh. The command tsh ssh first opens an SSH connection to the Proxy, which performs access checks. The Proxy then opens a connection to the target on behalf of the user. It forwards that connection over an SSH channel back to tsh while recording the session.
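At its core, the forwarding step is a bidirectional byte copy between the client-facing channel and the connection to the target. The following is a minimal sketch that assumes both sides are plain io.ReadWriteCloser values. The names splice and demoSplice are hypothetical, and the sketch omits the access checks and session recording that Teleport performs:

```go
package main

import (
	"io"
	"net"
	"sync"
)

// splice copies bytes in both directions between a and b. When either
// direction stops, it closes both ends so the other copy unblocks as well.
// Hypothetical helper for illustration, not Teleport's implementation.
func splice(a, b io.ReadWriteCloser) {
	var wg sync.WaitGroup
	var once sync.Once
	pump := func(dst io.Writer, src io.Reader) {
		defer wg.Done()
		io.Copy(dst, src) // returns on EOF or once either end is closed
		once.Do(func() {
			a.Close()
			b.Close()
		})
	}
	wg.Add(2)
	go pump(a, b) // target -> client
	go pump(b, a) // client -> target
	wg.Wait()
}

// demoSplice wires client <-> proxy <-> target with in-memory pipes, sends
// one message in each direction through splice, then hangs up.
func demoSplice() (atTarget, atClient string, err error) {
	client, proxyIn := net.Pipe()
	proxyOut, target := net.Pipe()
	done := make(chan struct{})
	go func() {
		splice(proxyIn, proxyOut)
		close(done)
	}()

	buf := make([]byte, 64)
	var n int
	if _, err = client.Write([]byte("whoami\n")); err != nil {
		return
	}
	if n, err = target.Read(buf); err != nil {
		return
	}
	atTarget = string(buf[:n])

	if _, err = target.Write([]byte("alice\n")); err != nil {
		return
	}
	if n, err = client.Read(buf); err != nil {
		return
	}
	atClient = string(buf[:n])

	client.Close() // closing the client side tears down both directions
	<-done
	return atTarget, atClient, nil
}
```

Closing either end tears down both directions. This mirrors how a proxied session ends when either the user or the host hangs up.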

Identifying latency

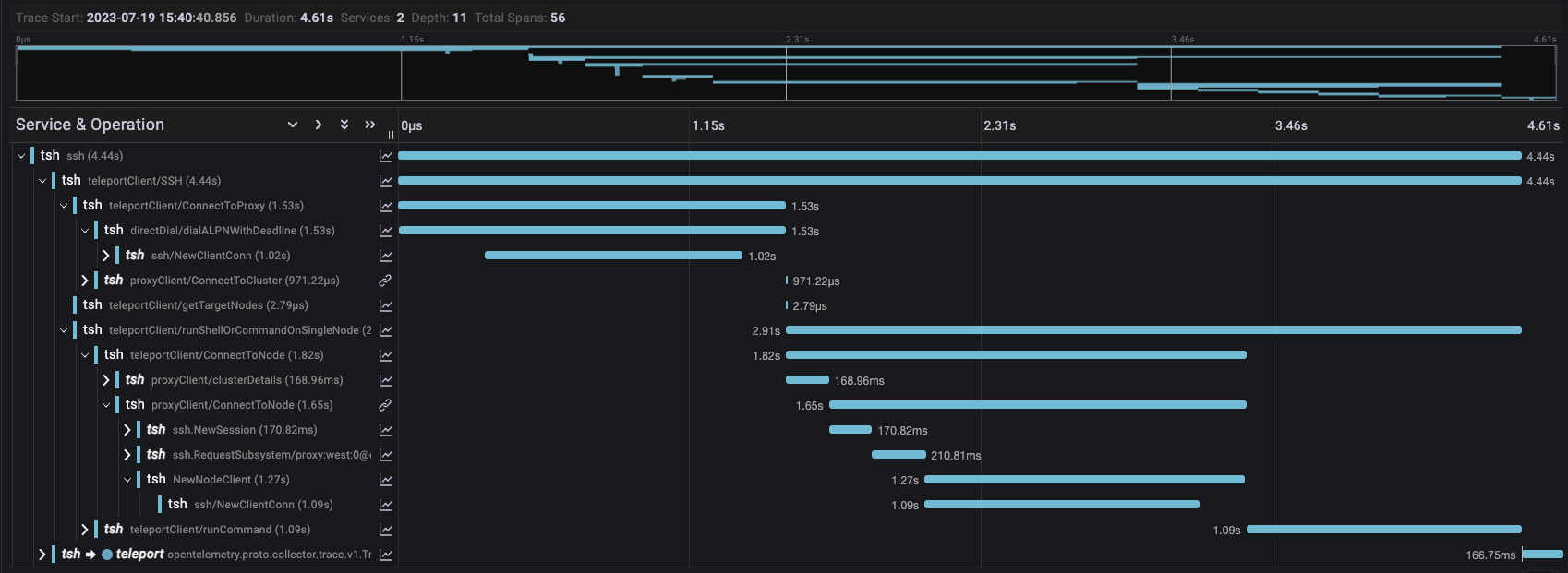

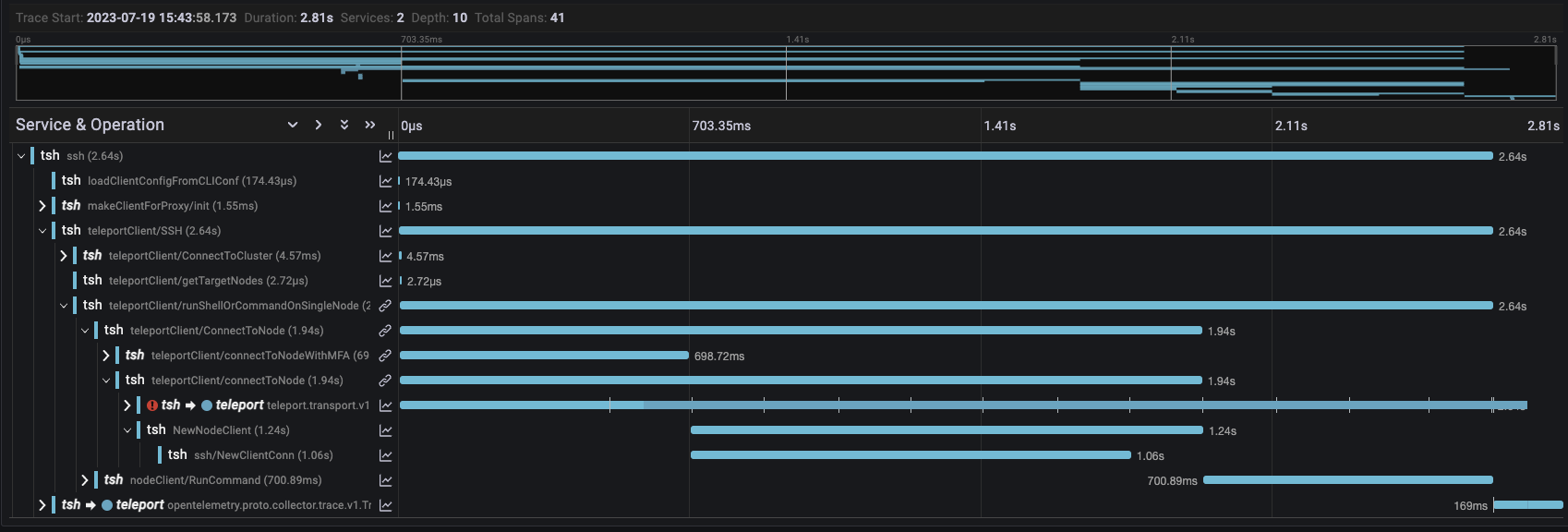

First, we needed to find out why the connections were slow. To see exactly what tsh was doing at every step of the connection, we used distributed tracing with OpenTelemetry.

Once tracing was in place, we started gathering data. We ran several commands, e.g., tsh ssh alice@host whoami, with the client, Proxy, and target hosts located in different regions around the world:

- Client, Proxy, Host colocated in region a

- Client in region a, Proxy and Host colocated in region b

- Client in region a, Proxy in region b, Host in region c

- Client and Proxy colocated in region a, Host in region c

We noticed that tsh sent the same request to the Proxy several times, and that these requests could be consolidated into a single one. However, as the trace below shows, establishing SSH connections was by far the most expensive operation.
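One generic way to collapse repeated identical requests within a single command is to memoize the first response. The following is a minimal sketch that assumes the response cannot change during one tsh invocation. The helper memoize is hypothetical, and the post does not describe how tsh actually consolidated its requests:

```go
package main

import "sync"

// memoize wraps fetch so it runs at most once. Every later call reuses the
// first result, including its error. Hypothetical helper for illustration.
func memoize[T any](fetch func() (T, error)) func() (T, error) {
	var (
		once sync.Once
		val  T
		err  error
	)
	return func() (T, error) {
		once.Do(func() { val, err = fetch() })
		return val, err
	}
}
```

Caching the error along with the value is a deliberate choice: a failed lookup is not retried within the same command. On Go 1.21 and later, sync.OnceValues provides the same behavior.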

Why are SSH connections slow?

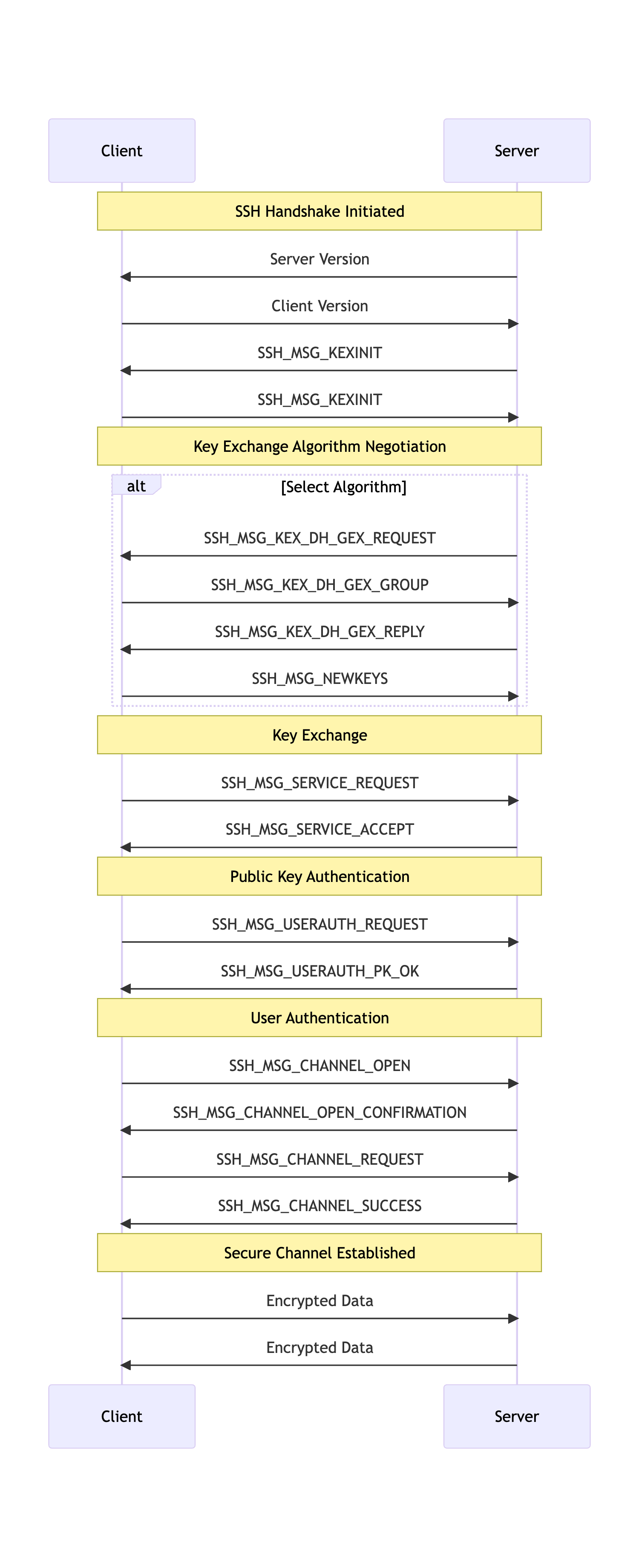

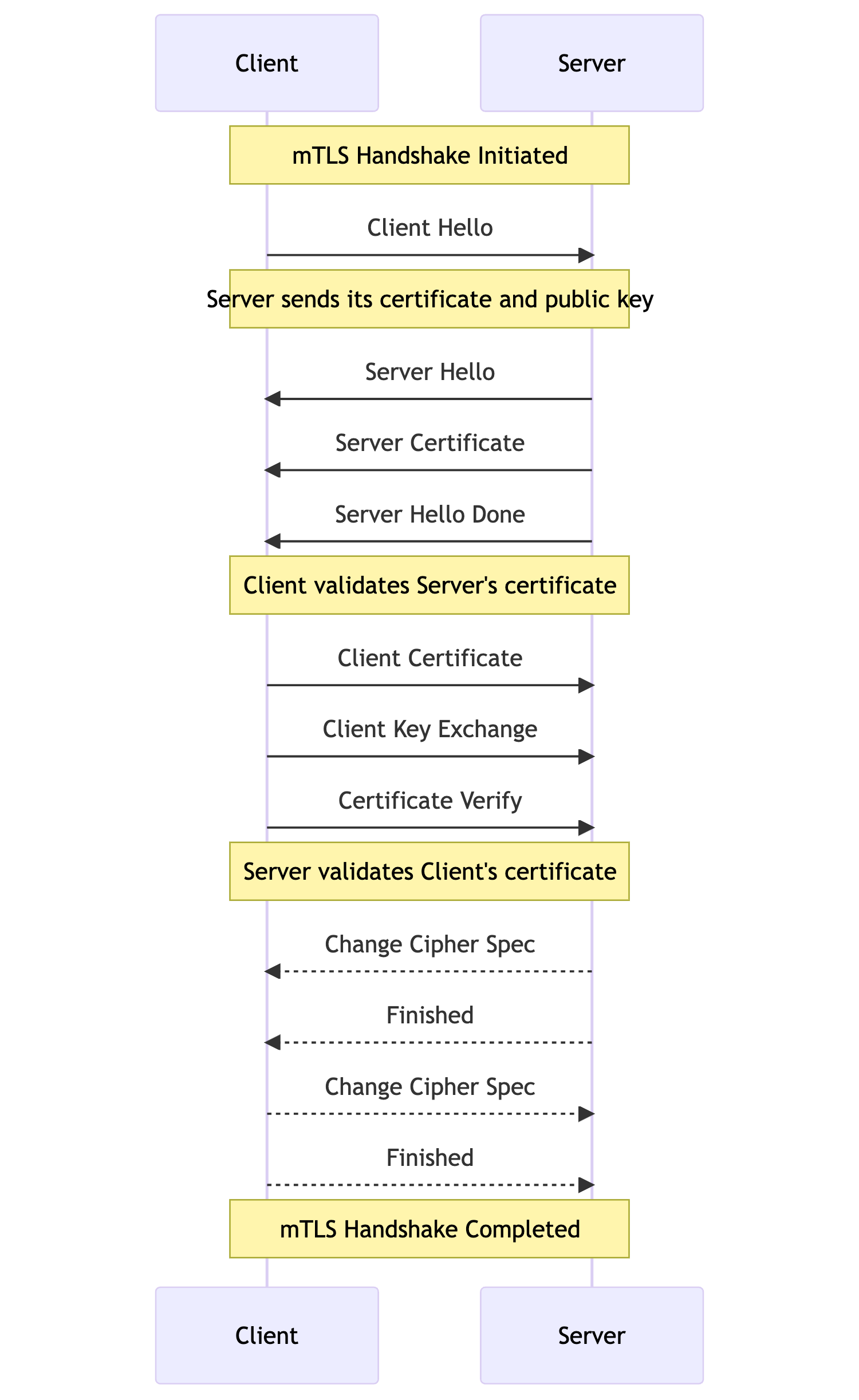

SSH was designed for secure remote access to machines, not for service-to-service communication. The protocol ensures that the connection is secured, that both parties are verified, and that the user is authenticated before any data is exchanged. This helps achieve two of the fundamental pillars of security: confidentiality and integrity. However, these guarantees come at the cost of initial connection latency. Every SSH connection must complete the SSH handshake before it can be used.

The client and server need several round trips to exchange all the information required to complete the handshake. The slowest part of the handshake is the key exchange, in which both sides jointly generate the session keys.

Faster SSH connections

Altering the SSH protocol or implementing a bespoke alternative were not viable solutions to our problem. So what could we do?

What if we only needed a single SSH handshake? The connection between tsh and the Proxy is completely opaque to the user and used SSH only for legacy reasons. When users log in, they already receive both SSH and TLS certificates that encode the same information about their roles. So we decided to replace the SSH connection to the Proxy with one that uses TLS.

gRPC and mTLS?

Since we already used gRPC and mutual TLS (mTLS) for other services, it seemed like a natural fit. The SSH channel which proxies the SSH connection to the target could easily be replaced with a bidirectional stream.

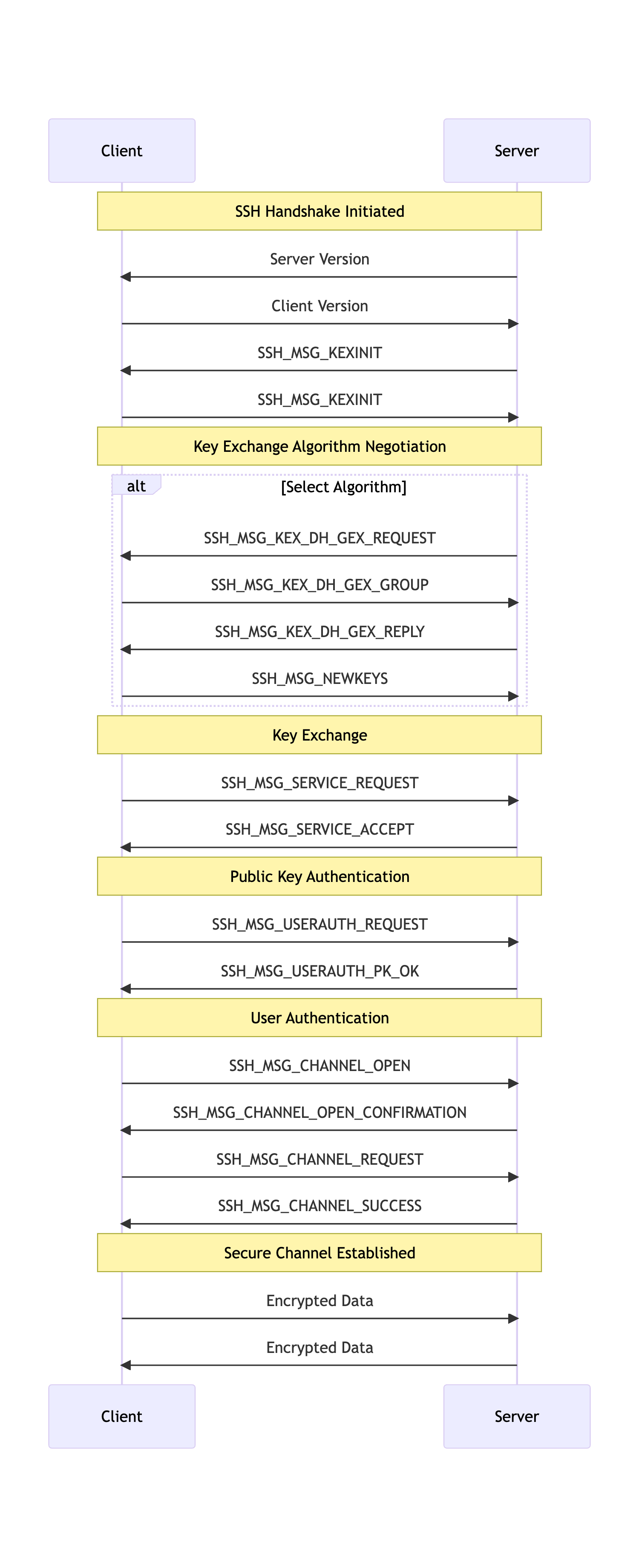

gRPC was designed to be a high-performance, low-latency RPC framework for service-to-service communication. It uses HTTP/2 as its transport and Protocol Buffers for data serialization to reduce latency and network bandwidth. To provide the same security guarantees as SSH, gRPC can be paired with mTLS. Establishing a TLS connection also involves a handshake, similar to SSH. However, the TLS handshake is much faster.

Compared with the SSH handshake, the only things exchanged are certificates, which the client and server already possess. TLS was specifically designed to provide secure communication over the internet without unnecessary overhead. As TLS adoption has grown, so have efforts to improve and optimize it. For instance, the latest version, TLS 1.3, introduced a number of handshake improvements that further reduce connection latency, including completing a full handshake in a single round trip.

Implementation

For this transition to succeed, we set two major criteria:

- Anything that worked with the old mechanism MUST also work with the new gRPC implementation.

- There should be no new configuration required.

We wanted users to get faster connections just by upgrading their cluster, without any impact on their existing workflows. To achieve this, we updated the Proxy to multiplex SSH and gRPC on the same address. That way, admins do not need to configure a new address, and both new and legacy versions of tsh keep working against a newer Proxy. The largest hurdle was ensuring that, after the switch to gRPC, everything continued to work exactly as it did over the legacy SSH connection. Beyond basic SSH workflows, this meant also supporting workflows that rely on agent forwarding.

To achieve this, we multiplexed SSH and SSH agent payloads over the same gRPC stream, using a Protocol Buffers oneof field to distinguish between them.
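protoc-gen-go represents a oneof as an interface-typed field with one wrapper struct per case, so the receiving end can route each message with a type switch. The following is a minimal sketch with hand-written stand-ins loosely modeled on that generated code. The names Frame, SSHData, AgentData, demux and collect are hypothetical, not Teleport's protobuf definitions. For simplicity, frames arrive as a slice instead of from a stream's Recv loop:

```go
package main

import (
	"bytes"
	"fmt"
	"io"
)

// isFramePayload mimics the interface protoc-gen-go emits for a oneof:
// only the wrapper type of each case implements it.
type isFramePayload interface{ isFramePayload() }

// SSHData carries bytes of the SSH connection to the target host.
type SSHData struct{ Data []byte }

// AgentData carries bytes of the forwarded SSH agent protocol.
type AgentData struct{ Data []byte }

func (*SSHData) isFramePayload()   {}
func (*AgentData) isFramePayload() {}

// Frame is one message on the bidirectional stream.
type Frame struct{ Payload isFramePayload }

// demux routes each frame's bytes to the writer for its oneof case.
func demux(frames []*Frame, ssh, agent io.Writer) error {
	for _, f := range frames {
		var err error
		switch p := f.Payload.(type) {
		case *SSHData:
			_, err = ssh.Write(p.Data)
		case *AgentData:
			_, err = agent.Write(p.Data)
		default:
			err = fmt.Errorf("unexpected payload %T", p)
		}
		if err != nil {
			return err
		}
	}
	return nil
}

// collect runs demux into two in-memory buffers and returns their contents.
func collect(frames []*Frame) (sshOut, agentOut []byte, err error) {
	var s, a bytes.Buffer
	err = demux(frames, &s, &a)
	return s.Bytes(), a.Bytes(), err
}
```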

By creating a simple io.ReadWriteCloser implementation on top of the gRPC stream, we could easily plug the new transport mechanism into golang.org/x/crypto/ssh.NewClientConn and golang.org/x/crypto/ssh/agent.ServeAgent and everything just worked.

Connecting to a host via gRPC: client.go#L115

Connecting to a host via SSH: client.go#L580

Results

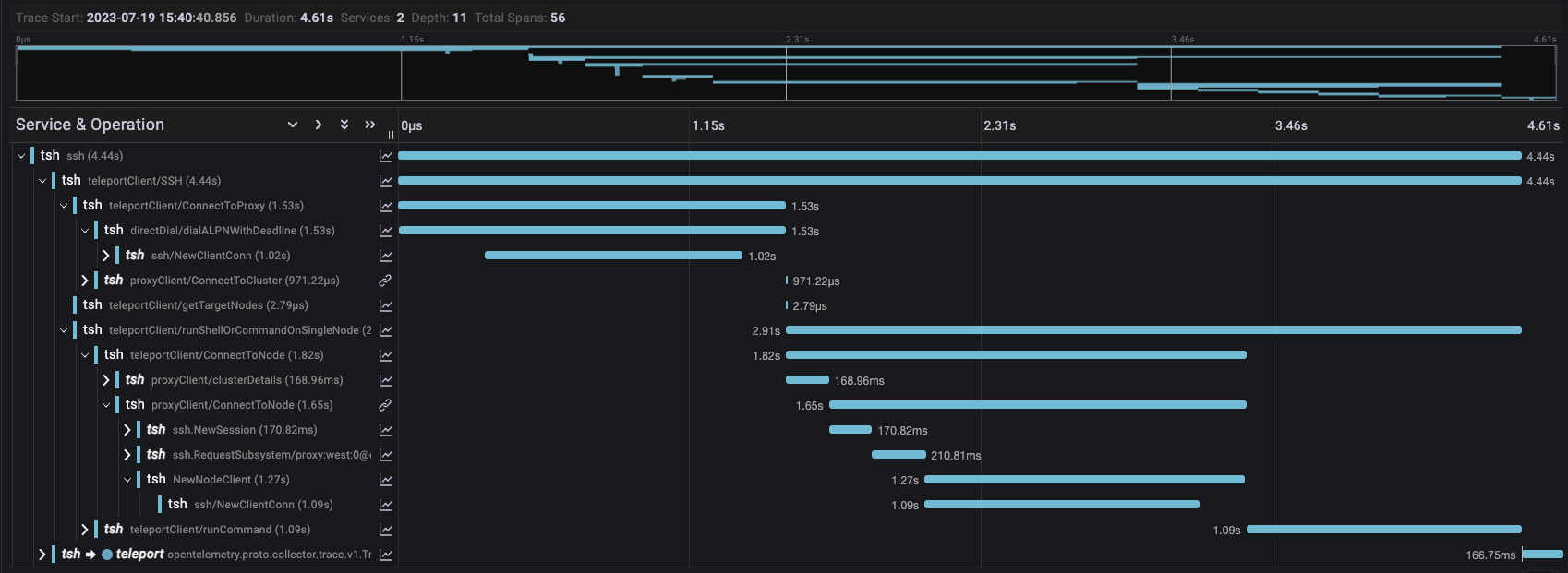

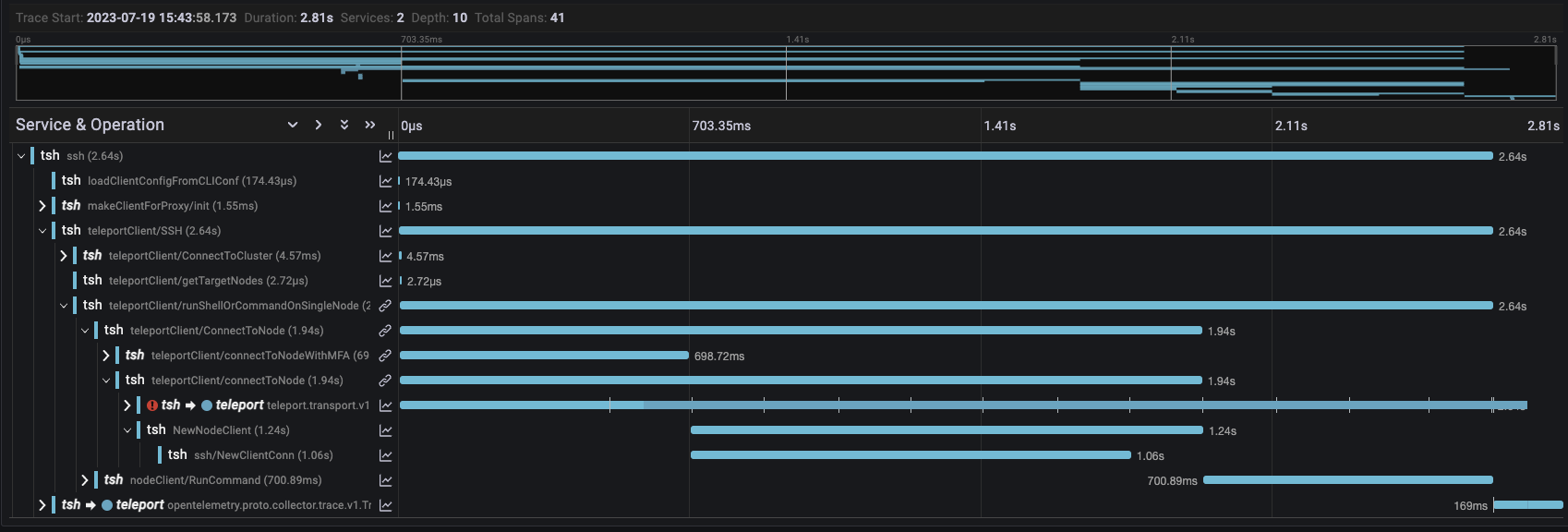

To measure the results, we reran the same tsh ssh alice@host whoami test, with the client, Proxy, and target hosts spread across the same regions as before. As the trace below shows, the time spent connecting to the Proxy is now negligible. Switching to gRPC improved connection latency by up to 40% when the client and Proxy are geographically far apart.

The table below shows the times captured with Teleport v12, which uses the legacy SSH connection to the Proxy, and Teleport v13, which replaced SSH with gRPC. Even when the client and Proxy were in the same region, replacing SSH with gRPC still reduced connection latency by at least 13%.

| Client | Target | Proxy | v12 (SSH) | v13 (gRPC) | Reduction |
|---|---|---|---|---|---|
| us-east-1 | us-west-2 | us-west-2 | 2.630s | 1.710s | 34.98% |
| us-east-1 | ap-southeast-1 | us-west-2 | 4.990s | 3.670s | 26.45% |
| us-west-2 | us-west-2 | us-west-2 | 0.698s | 0.562s | 19.47% |
| us-west-2 | ap-southeast-1 | us-west-2 | 3.600s | 2.640s | 13.73% |
| ap-southeast-1 | us-west-2 | us-west-2 | 4.040s | 2.640s | 40.54% |
| ap-southeast-1 | ap-southeast-1 | us-west-2 | 6.610s | 4.700s | 28.90% |
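The Reduction column is the drop in connection time relative to v12, computed as (v12 - v13) / v12 × 100. The following small helper recomputes it from the raw timings; reduction is a hypothetical name:

```go
package main

import "fmt"

// reduction returns the percentage drop from before to after, formatted
// like the table's Reduction column.
func reduction(before, after float64) string {
	return fmt.Sprintf("%.2f%%", (before-after)/before*100)
}
```

For example, the first row gives (2.630 - 1.710) / 2.630 × 100 ≈ 34.98%.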
